Nate Silver, pictured above in a screenshot detail from a YouTube video, recently called the accuracy of media polling averages "uncanny." (Credit: YouTube)

Is the accuracy of media polling averages “uncanny,” as Nate Silver of FiveThirtyEight recently claimed on his New York Times blog?

We don’t think so.

OK…it was uncanny, perhaps, when George Gallup first showed how well-conducted polls could predict elections. But that was in 1936, almost three-quarters of a century ago, when “scientific” polls were just beginning to be understood.

Yes, it was astonishing at the time that Gallup accurately predicted FDR’s landslide reelection victory, based on a sample of just a few thousand voters, while the Literary Digest’s poll wrongly predicted a landslide for Alf Landon – though its sample included over 2 million voters!

Since then, however, we’ve come to understand how small, but scientifically chosen, samples can represent a population of over 300 million.

Since then, however, we’ve come to understand how small, but scientifically chosen, samples can represent a population of over 300 million.

We now expect (or should expect) news media polls, conducted only 24 to 48 hours before an election, to accurately predict how voters will cast their ballots – by reporting what voters say they will do! So, can’t we all agree on that point and move on?

There are problems involved in media polling these days but, in general, the major problem is not with bad predictions right before an election.

Yet there are exceptions. The polls in the 2008 New Hampshire Democratic Primary all predicted Barack Obama would win, by an average margin of about 9 percentage points, yet he lost to Hillary Clinton by 2 points.

And the subsequent South Carolina Democratic Primary polls were also off by significant margins (larger than the error in New Hampshire) – wildly underestimating Obama’s margin of victory.

But importantly, those were primary elections. In general elections, party affiliation typically anchors voters’ preferences and works against major shifts in the final hours before the election.

So if we ignore, for the time being, the relatively infrequent times when final polls before any election prove to be far off the mark, what are the problems with news media polls today?

Two problems immediately come to mind:

- Media polls don’t give us a realistic picture of what the public is thinking on most public policy issues.

- Media polls don’t give us a realistic picture of the electorate during the campaign season. And the more time before an election, the worse the problem.

Regarding my first point, consider the most recent polling fiasco involving policy polls: eleven different polls (by nine organizations) produced radically different pictures of public opinion on the Bush-era tax cuts.

According to a Pew poll, as few as 27 percent of Americans oppose extending those tax cuts for the wealthy, while an NBC/Wall Street Journal poll reports that 66 percent are opposed, an astounding difference of 39 percentage points! Now that’s uncanny! Polls by CBS, CNN, and Newsweek all found majorities opposed, while polls by Ipsos/Reuters, Fox, and AP found fewer than four in ten Americans opposed. Uncanny indeed.

And, unfortunately, this polling debacle is not an aberration.

For many other examples, and the reasons behind them, see how the polls varied wildly on health care, or take a look at The Opinion Makers. Or stay tuned to this PollSkeptics Report, where my colleague, George Bishop, and I will undoubtedly point to many other examples as the months unfold.

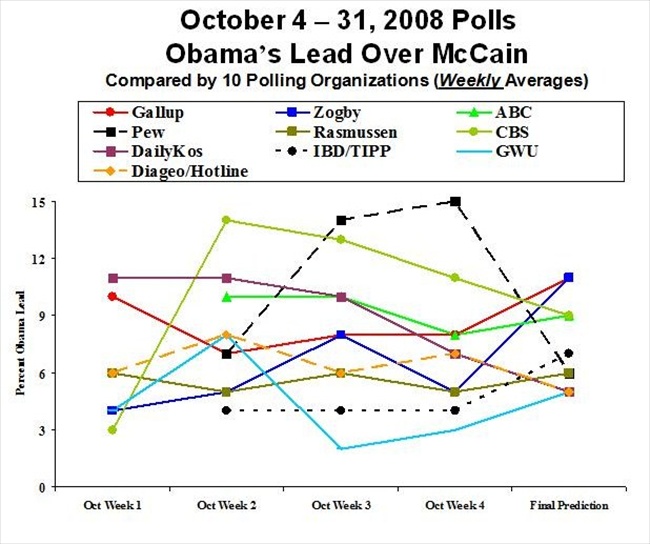

As for the second problem (polls not giving us a realistic picture of the electorate before the election), the evidence is overwhelming for the 2008 presidential election, among others. Over the four weeks leading up to that election, ten different polling organizations presented a wonderful kaleidoscope of results, which then converged to within a narrow range in the final pre-election polls. (All results were taken from pollster.com.)

See above a graph of 10 polling organizations’ reports on Obama’s lead in the polls from Oct. 4-31, 2008.

Note that there are several different ways to interpret what happened in October, depending on what polls we might have followed during this period:

- IBD/TIPP, Rasmussen and Diageo/Hotline polls: We’d conclude that the voters essentially made up their minds by the beginning of October and made no significant changes in their voting intentions from then on.

- The CBS poll: We’d conclude that Obama had a small lead in Week 1 that surged to 14 points in Week 2, and then gradually declined over the rest of the month.

- The Pew poll: We would come to a very different conclusion, namely that Obama had a modest lead in Week 2 that jumped to 14 and 15 points the following two weeks, only to collapse right before the election.

- Any one of the other polls? We would come to still different conclusions about what happened in October, with trends falling somewhere in between the extremes of the polls described above.

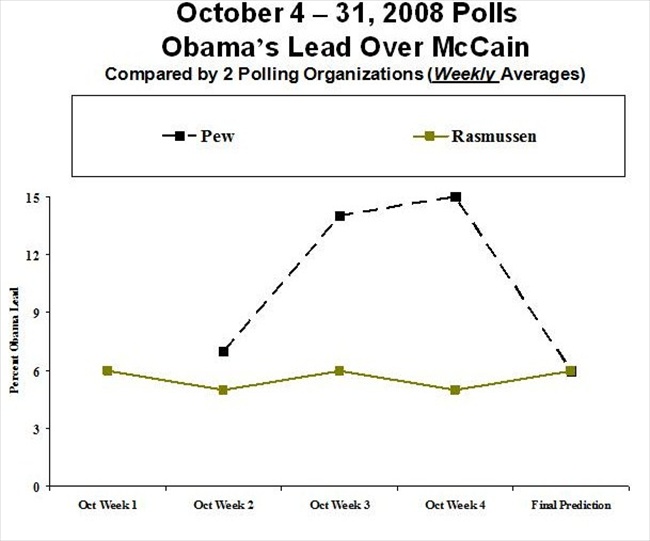

A good illustration of my point is the comparison between the Pew and Rasmussen descriptions of the October campaign: A very exciting ride, says Pew. Hardly any movement at all, says Rasmussen.

See above a comparison of Pew and Rasmussen’s reports on Obama’s lead in the polls from Oct. 4-31, 2008.

Yes, their ultimate predictions are identical, but each polling organization’s description of the campaign dynamics during October could hardly be more different.

These portrayals of what happened in October contradict one another, so of course they can’t all be correct.

If the polls all converge by election time, so that they all correctly predict the winner (see the “convergence mystery” discussion that followed the 2008 election), why do they diverge so sharply in the weeks leading up to the election, producing such different and inaccurate measurements of where the electorate stands?

Now that’s a problem worth investigating and complaining about, along with the problem of conflicting polls on policy matters.

However, most critics, like Nate Silver and Thomas Holbrook, seem to be obsessed with and impressed by the ability of polls to predict voting behavior two days away.

They apparently don’t like to look at all the contradictory findings of polls leading up to the election, or the many poll contradictions in measuring public opinion more generally.

With all their laudatory comments about how poll averages can predict elections, many poll critics leave us with the impression that because polls can ultimately predict elections, they must also be good at measuring public opinion on policy matters.

But that simply isn’t the case, as we can see from the many contradictory polls on current issues.

It’s simply a fact: The predictive accuracy of polls right before elections is not a valid indicator of poll accuracy in measuring public opinion more generally.

Of course, it’s useful to know that even with changing technology and lower response rates, the polls can still accomplish the mundane. But that’s not the whole story of polling the public, or even the most important part of the story.

After all, an election is going to occur and those results will be the only “polls” that count. But on public policy issues, there is no voting outcome that will reveal what the public is thinking. Here the polls could really be useful to democracy.

That they fail so badly in this area is a problem that serious critics need to address. Instead of fixating on how well poll averages predict elections, they need to look more deeply into why polls are so “uncannily” inaccurate at measuring public opinion.

George F. Bishop is Professor of Political Science and Director of the Graduate Certificate Program in Public Opinion & Survey Research at the University of Cincinnati. His most recent book, The Illusion of Public Opinion: Fact and Artifact in American Public Opinion Polls (Rowman & Littlefield, 2005) was included in Choice Magazine’s list of outstanding academic titles for 2005 (January 2006 issue).

David W. Moore is a Senior Fellow with the Carsey Institute at the University of New Hampshire. He is a former Vice President of the Gallup Organization and was a senior editor with the Gallup Poll for thirteen years. He is author of The Opinion Makers: An Insider Exposes the Truth Behind the Polls (Beacon, 2008; trade paperback edition, 2009). Publishers Weekly calls it a “succinct and damning critique…Keen and witty throughout.”