Veteran pollster David Moore found a troubling contradiction when comparing a report from the Pew Research Center’s Project for Excellence in Journalism with the poll’s methodology statement. After two months of correspondence with Pew, the methodology statement has been changed, but the footnote “correction” only vaguely describes what really happened. Read the full story below.

* * * * * * * *


The Pew Research Center is clearly among this country’s most prestigious sources for understanding public opinion, both in the United States and around the world.


It bills itself as a “non-partisan ‘fact tank’ that provides information on the issues, attitudes, and trends shaping America and the world.” A good part of its activity is analyzing public opinion polls, the results of which are widely cited in the news media. However, one of its recent poll reports about “America’s news executives,” titled “News Leaders and the Future” posted on Pew’s Project for Excellence in Journalism (PEJ) website, serves as a poster child for the way journalists should not deal with polls.

More surprising was Pew’s response to routine questions about the poll’s methodology. Instead of applying their promoted values of “full disclosure” and complete “transparency,” Pew’s experts made contradictory and evasive statements, finally “correcting” the methodology with a revised statement whose truth, as they later admitted, they could not confirm for 34 days after posting it.

This lack of transparency was especially unexpected, because two of the three Pew experts involved are national polling leaders: Pew’s director of survey research, Scott Keeter, who is also president-elect of the American Association for Public Opinion Research (AAPOR); and the CEO of Princeton Survey Research Associates International (PSRAI), Evans Witt, who is also president of the National Council on Public Polls (NCPP).

Even more ironic is that NCPP recently issued a new call to pollsters for full disclosure in describing their methodology, and AAPOR has launched a “Transparency Initiative,” which the association calls “a program to place the value of openness at the center of our profession.”

If the following tale is illustrative of the difficulties that one of the most prestigious organizations has in revealing the whole truth, the prognostication for these new initiatives doesn’t appear very promising.

The Not-So-Excellent Poll from Pew’s Excellence in Journalism Project (PEJ)

One of the more interesting news sites on the web is the Pew Research Center’s Project for Excellence in Journalism (PEJ). I’ve been particularly fond of its periodic reports on patterns of news coverage (its news coverage index), based on systematic content analysis. But there are many other interesting attractions to this site as well, which I always assumed met the project’s standard of excellence.

Thus, I was startled to come across a report of one of its recent polls – titled, “News Leaders and the Future: News Executives, Skeptical of Government Subsidies, See Opportunity in Technology but Are Unsure About Revenue and the Future” – that seemed to exaggerate the extent to which the results could be generalized to a larger population.

The article focused on the views of news executives about the future of the news industry, based on a survey conducted for Pew by PSRAI. The opening statement left little doubt that the views reported in the article were supposed to represent the views of news executives across the country:

“America’s news executives are hesitant about many of the alternative funding ideas being discussed for journalism today and are overwhelmingly skeptical about the prospect of government financing, according to a new survey by the Pew Research Center’s Project for Excellence in Journalism in association with the American Society of News Editors (ASNE) and the Radio Television Digital News Association (RTDNA).”

Five paragraphs later, however, the reader learns that the survey was not of news executives across the country after all, but just the selected executives who happen to have been members of ASNE and RTDNA.

And then, readers who were really persistent, who finally clicked on the method statement, called the “topline,” at the very end of the article, would have learned that the sample of surveyed executives did not represent even those two professional organizations.

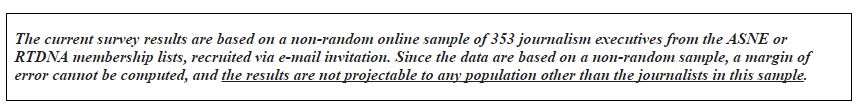

The pollster at PSRAI, which conducted the survey for Pew, made it abundantly clear that this was not a scientific poll, and the results could not be generalized to any larger population. The crucial piece of information was located at the top of the page (now deleted, and that’s part of this tale), and it said:

“Topline” method statement, which makes clear that poll results cannot be generalized to “America’s editors,” as the author did.

Yikes!

This means that all of those generalizations that were supposed to reflect the views of “America’s editors” were simply wrong. According to the caveat, the sample was so skewed, the pollster couldn’t be sure that the views represented any group – other than the participants themselves.

Unlike PSRAI’s normal methodology statements, this one was bolded and italicized (I added the underline for this article), suggesting that the pollster wanted to be sure Pew understood the limits of the poll’s findings.

Pew’s Initial Reaction

I wrote to Tom Rosenstiel, the Project for Excellence in Journalism director, May 1, 2010, outlining my concerns about the inconsistency between the report (which generalized to “America’s editors”) and the methodology statement (which said no generalizations were valid). It turns out that he had authored the article and, initially, he denied there was an inconsistency: “The short answer is we didn’t intend to suggest this was a scientifically representative sample and tried to be transparent about who was surveyed,” he wrote May 3. [For the full chronological account of correspondence between Pew’s representatives and me, click here.]

Tom Rosenstiel, director and founder of Pew’s Project for Excellence in Journalism (Credit: YouTube)

By the next day, May 4, however, he had changed his tune. In fact, he changed it three times in the e-mail: “The polling professionals here think the sample is stronger than you describe it.” [Read: It was my mistake.] “In an overabundance of caution, our methodology statement probably goes too far in discounting the extent to which our results can be generalized to the population of editors and executives.” [Read: It was the pollster’s mistake.] “We had no intention to suggest more than we believe the survey represents….” [Read: There was no mistake at all – or at least no “intentional” mistake.]

In the e-mail, Rosenstiel defended the way he wrote the article. He said he tried to be clear who was surveyed, and that Pew had been “transparent in the methodology and topline,” though he claimed that the survey was in fact “a sample of these two major industry groups as the report states.” He stated explicitly that “we [he and the polling professionals at Pew] believe that the results of the survey represent the members of the two leading newspaper and broadcast trade organizations [ASNE and RTDNA] …”

That, of course, was not what the posted methodology statement said. It said the results did not represent the views of any larger group besides the specific individuals who happened to agree to participate in the survey. Thus, according to Pew’s own posted caveat in the “topline,” Rosenstiel had no valid basis for making the generalizations he did.

As far as I could tell, this was the end of the story in their minds. I notified Rosenstiel May 7 that I was going to write a column about this matter, and he told me that “I am sorry to hear you want to persist.” He felt I was “thoroughly mischaracterizing” their report. “But you had written your opinion piece before you had gathered the facts, and from the tone it was clear you were well dug in.”

Pew Wants to Change the Methodology Statement

Actually, I wasn’t so much “dug in” as I was perplexed. More than a week after I first notified Rosenstiel of the inconsistency between his article and the methodology statement, nothing had changed on the Pew website.

One especially salient comment that Rosenstiel made in his May 4 e-mail to me was that the “polling professionals” at Pew felt the sample was “stronger” than I had described it.

Of course, all I had done was parrot what the methodology statement said, so accusing me of underestimating the strength of the sample was to fault me for reporting that the emperor admitted he was wearing no clothes.

Naturally, I wondered who these “polling professionals” were who disagreed with the PSRAI methodology statement. So I asked. Lo and behold! One of them was PSRAI’s CEO, Evans Witt, and the other was Pew’s Director of Polling, Scott Keeter.

If these two gurus (and I mean that seriously – they are highly accomplished polling experts) disagreed with what Witt himself had originally written, why was that statement still on the website? Were they going to change the statement? That was the question I posed to Rosenstiel.

Evans Witt, CEO of Princeton Survey Research Associates International, which conducted the poll for Pew (Credit: Twitter)

He said “yes, they want to change the methodology statement to make it more precise and accurate.”

However, Rosenstiel said, the two experts were at that time attending the annual AAPOR conference in Chicago, and “are getting together to do some final tweaking there on that language.” [My italics.]

Parenthetical Comments

Let me admit that I was surprised that Pew’s and PSRAI’s reaction was to change the description of the methodology statement, rather than change the report that came after the study had been designed and implemented.

This tack was especially surprising, because Witt is a highly accomplished pollster. I thought that he must have had a solid rationale for writing the first methodology statement as he did.

The first method statement implied that the list of members provided to PSRAI was incomplete, consisting just of those members who had provided their email addresses to ASNE and RTDNA respectively (the invitation to participate in the survey was sent by email, and the survey itself was conducted online, requiring respondents to access a particular website).

It’s quite likely that most news executives would have email addresses, of course, but apparently Witt had reason to believe that a significant number of members were not on the list, either because they did not have email, or (more likely) because they had not provided their email addresses to ASNE and RTDNA to be used for such surveys.

The incomplete list meant that Witt did not have access to all the members, nor did he know anything about the members who were missing from the list. With such a flawed list, it would be impossible to design a random sample that could be representative of the overall membership.

Thus, it was reasonable that, as he did, Witt would characterize each list (one from each organization) as a “non-random” sample of members, and thus warn Pew that it was not scientifically representative of any larger population.

Pew Fundamentally Alters Its Methodology Statement

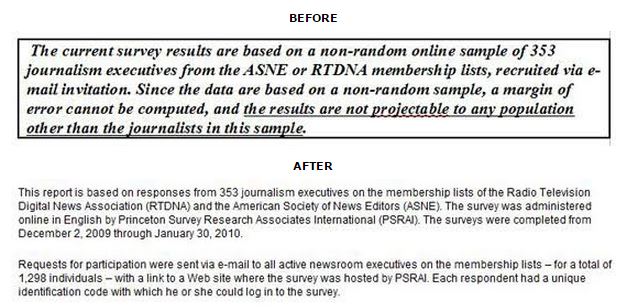

Five days after I inquired about possible changes in the original methodology statement, and more than two weeks after I initially pointed out the inconsistency between it and Rosenstiel’s report, Rosenstiel sent me a revised methodology statement, which has been posted on the Pew website (see accompanying text).

The revised methodology information sent to David Moore by Tom Rosenstiel and posted on Pew’s website as an update.

“Tweaking” is hardly the word I would use to characterize the changes.

In fact, the new methodology statement repudiated the previous one, claiming now that “all” active news executives on the ASNE and RTDNA membership lists had been invited to participate in the survey, making it a scientifically representative sample after all – representing the respective memberships of ASNE and RTDNA.

This is no trivial modification. It’s like describing an apple as “rotten,” until someone notices that indeed it is rotten, and then re-describing it as “fresh.” The apple hasn’t changed, just the description.

Again, this appeared to be the end of the story as far as Pew was concerned. Keeter and Witt had their new methodology statement, which now matched Rosenstiel’s report on the PEJ website, so life was grand.

An “Overabundance of Caution” Led to a “Flawed” Methodology Statement?

For me, the May 18 revised statement seemed to come out of nowhere, because it suddenly claimed that “all” active executives on the lists provided to PSRAI had been invited to participate in the survey.

How did Keeter and Witt know that? Originally, Witt had apparently assumed that not all active executives were included on the list, giving rise to his “non-random sample” statement. What had changed? [Despite various attempts to find out what had changed, this question was never answered. See the “Stonewalling” section below for more detail.]

The attempts to sugarcoat the original decision began with Rosenstiel, who claimed that “In an overabundance of caution, our methodology statement probably goes too far in discounting the extent to which our results can be generalized to the population of editors and executives…Our concern that ASNE’s membership has declined led us to be very conservative in characterizing the survey in the methodology statement.”

Clearly Rosenstiel’s expertise is in journalism, not polling. The recent decline in the size of an organization’s membership would have no impact on whether a sample of members can represent the overall membership of that organization – especially since the pollsters (according to the new methodology statement) had invited all members to participate.

Moreover, the size of ASNE membership would have no impact on whether the RTDNA sample was representative of that organization’s membership. The two organizations are simply not linked. Yet, Witt’s caveat about the non-scientific nature of the sample applied to both ASNE and RTDNA.

While one can overlook Rosenstiel’s comments on sampling, it is more difficult to overlook Keeter’s and Witt’s professional judgment as pollsters.

So, it was very surprising when Keeter wrote: “I’ve conferred with Evans and his colleagues and we all agree that the original methodology statement was flawed because of an overabundance of caution.” [italics added]

Caution about what?

About the declining membership of ASNE making it difficult to say whether the RTDNA sample represented RTDNA?

These professionals would be laughed out of the room if they made such an argument. Which, I guess, they apparently realized.

Several times, I pressed them on this matter.

Did they also believe the overabundance of caution was caused by ASNE’s declining membership? Fortunately for my faith in their expertise, they did not embrace that argument. They simply declined to address it.

My only conclusion was that they felt it sounded better to claim the first methodology statement was “flawed” because they were being overly “cautious,” rather than because they had simply screwed up.

Finally, The Truth Emerges (Sort Of)

But I wasn’t convinced the original statement was a screw-up.

What bothered me was why Witt, who surely knows sampling methodology, would have made such a major mistake in the first place.

After I pressed him for details May 18 about the sampling frame (details that a polling organization is required to produce, according to AAPOR and NCPP standards, if requested), Witt finally acknowledged May 24 that “We were assured that the organizations [ASNE and RTDNA] had obtained email addresses for a substantial majority of their members and provided those to PSRAI.” [italics added]

Aha!

Here was the information I had suspected all along. Although a “substantial majority” of members had provided the organizations with email addresses, that meant a significant number had not done so.

That explained why Witt had originally characterized the sample as non-scientific.

The lists of members of ASNE and RTDNA were incomplete, so he didn’t have access to the whole membership of the two organizations. He couldn’t have invited all active members to participate. Moreover, he didn’t know how many active members were missing from the list or anything else about them.

But in the same email, Witt contradicted his admission that not all members were included, by claiming that all members were included. (That’s what a “census survey” means in his comments below – that a whole group of people, not just a sample, receive the questionnaire.)

“And, in this case, a census of the two membership lists was attempted.”

Apparently, here Witt means that all the members on the lists he had received were invited to participate, but he glosses over the fact that not all members were included on those lists.

The reader wouldn’t know the full truth from the methodology statement, because of the way the information was presented.

Witt went on to justify his first methodology statement: “Describing the results of a census to the general reader is tricky, since such an effort does not rely on a sample in the traditional sense of a general population RDD survey.”

What an admission! He claims that he characterized the sample as “non-random” because he found it too tricky to describe what a census survey is?

One way to describe a census survey would be: “All active executives on the lists were invited to participate.” Yes, that quotation comes from the new methodology statement that Witt and Keeter posted after I raised the issue. Apparently, it’s not that “tricky” to write after all.

Witt continued: “So the original note at the top of the PEJ topline was a short-hand attempt by me to deal with the study design. After you questioned the note, Pew and PSRAI came up with the new note on topline and added the full methodological report that was inadvertently omitted originally from the website.”

My goodness! Here Witt claims the original methodology statement is essentially the same as the new methodology statement – except that the original one was a “short-hand attempt,” while the new one includes a “full methodological report” that was “inadvertently omitted” from the website.

Witt’s original “short-hand” statement said the poll’s sample was non-random, and that therefore “a margin of error cannot be computed,” and “the results are not projectable to any population other than the journalists in this sample.”

The new statement repudiated the non-scientific warning, and indicated the results could be generalized, and provided a margin of error for each sample.

Is “rotten” really short-hand for “fresh”?

No Evidence Presented to Support Claim of Pew’s Revised Methodology Statement

While Witt was trying to claim that there was little difference between the original “short-hand” methodology statement and the “full methodological report” that was now posted on the website May 24, I was pressing him to clarify how he could claim that “all” active executives were included in the sample. Based on his responses cited above, I wrote:

Moore (May 24):

“Just to be clear: You say you had been assured that ASNE and RTDNA provided you email addresses for a substantial majority of their members, which suggests that you were unable to obtain email addresses for a certain minority of members. You then attempted a census of the members for whom you had email addresses, after removing the names of members who were listed in non-newsroom jobs, including those who had retired. Is my summary above an accurate description of the sample you used?” [italics added]

Witt (May 25):

“I’m happy to let you summarize my answers as you see fit.”

Moore (May 25):

“OK…fair enough.

“You say ‘a census of the two membership lists was attempted,’ but those two lists did not include all the members – because ASNE and RTDNA provided PSRAI ‘email addresses for a substantial majority of their members’ (at least that was the assurance they gave you) – which means many members were not on your list because ASNE and RTDNA did not have their email addresses.

“Since you apparently don’t know anything about the members who are not on your list, nor do you know even how many there are (except the number is somewhere less than 50%), it’s not clear to me how you can claim to have done a census of the active members of ASNE and RTDNA. My interpretation is that you tried a census of active members of ASNE and RTDNA with current email addresses.

“That’s an important qualification that is not in your new methodology statement.

“If I am wrong about this, would you please show me where my interpretation is faulty?”

Witt (May 25):

“It has been crystal clear from the day this research was released that the news executives on the lists were invited by email to participate and that the interviews were conducted online. That does indeed mean that news executives without email were not included. It also means that news executives without internet access were not included. And that does means that members of the ASNE and RTDNA who did not provide email addresses or who do not have internet access were not included.” [italics and underlining added]

Moore (May 26):

“Don’t you think you should change your methodology statement to make it clear that the membership lists you were operating from did not include all members?”

Witt had finally admitted what he had refused to say explicitly all along (but was now claiming it was “crystal clear” from the beginning): ASNE and RTDNA members without email or Internet access were not included in the sample.

Why wouldn’t he and Keeter make that admission on the website itself? Not to do so was a clear violation of any reasonable interpretation of “transparency” or “full disclosure” – and appeared to be a deliberate attempt to deceive the reader.

Non-Supported Methodology Statement Remains on Pew Website for 34 Days

As far as I could tell, Pew had now washed its hands of the matter.

Witt did not respond to my May 26 question about the need for an additional change of the methodology statement to make it clear that only members with email addresses were included in the survey. Although he implied that only a dunce would not have recognized this limit, since it had “been crystal clear from the day this research was released,” he was not willing to inform the Pew reader of that limitation.

So now it seemed as though these two professionals were deliberately deceiving the public about the nature of the sample. They had reason to believe that some unknown number of ASNE and RTDNA members could not be included in their sample (see Witt’s comments above), yet the methodology statement stated that all members “on their lists” had been invited to participate. How was the public to know the full truth?

A week later, with no response from Witt, and in a last effort to find out what Keeter was thinking, I wrote an email June 2 asking if he would talk with me about the matter. He said he was on vacation, but “we are working on getting a few additional details for the methodology statement.” That was news to me.

We did not speak by phone (all correspondence was by email and is included on this link), so on June 17, I wrote again, asking Keeter what those “additional details” might be. Finally, on June 21, Keeter announced:

“At long last we have heard back from both organizations. Both of them say that all active editors and executives of their organization have e-mail addresses and were included in the lists provided to PSRA for sampling. I do not know why we had so much trouble confirming this, since it seems unlikely that journalists in those positions would be unreachable by e-mail. But now we have confirmation that all active editors and executives were included in the lists. Accordingly, the methodology statement currently included with the topline is accurate and we have no plans to modify it.”

So there you have it. Although he didn’t know if the revised methodology statement was accurate when it was posted, finally, “at long last,” some 34 days later, Keeter suddenly produced evidence to support the claim.

A tempest in a teapot, after all. Or so Pew would have us believe.

Excellence in Journalism?

Still, one conclusion is clear, admitted by Pew: Rosenstiel wrote an article that was inconsistent with the methodology statement posted on the website at the time the article was posted. When he wrote the article, he either didn’t read the caveat in the methodology statement, or simply ignored it – neither action a model of excellent journalistic practices.

At the very least, Rosenstiel should have conferred with the pollster if he felt the methodology statement was not accurate – a point that Pew more or less acknowledges [see final section below for details].

Excellence in Polling?

Keeter’s announcement that ASNE and RTDNA now claim that all their members have email addresses raises the nagging question about how the original methodology statement came into being, if – as Witt later claimed – all along they had conducted a census survey of the memberships of ASNE and RTDNA.

The “short-hand” thesis begs to be given a decent burial, as does the explanation about an “overabundance of caution” because of ASNE’s declining membership.

And one can’t help but be troubled by the eagerness with which Keeter and Witt adopted the new methodology statement, even though – according to Witt – the evidence they had at the time suggested it was not correct.

I was concerned about their apparent disregard of the facts they had on hand, and thus wrote to them about my interpretation. I told them I was not asking them for a response, but was giving them an opportunity to respond if they felt my interpretation was wrong:

“Scott and Evans, you both agreed to repudiate the initial methodology statement’s characterization of the sample as ‘non-random,’ though at the time you did so, you didn’t have the evidence to do so. Yes, on June 21, you had ‘at long last’ the evidence you needed to justify your claim, but until then the evidence was lacking. In fact, Evans, in your ‘crystal clear’ email to me (May 25 at 3:46 PM), you conceded that ‘members of the ASNE and RTDNA who did not provide email addresses or do not have internet access were not included.’ You arrived at this conclusion, I believe, because of the assurance you had been given that only a ‘substantial majority’ of members – not all of them – had email addresses. Yet, the new methodology statement on Pew’s website said PSRAI had solicited ‘all’ active members to participate. So, Scott and Evans, at least for 31 days, Pew had on its website a statement that – to the best of your knowledge – was incorrect. [It was actually 34 days, posted 3 days earlier than I thought.]

“As I said earlier, these interpretations are based on what you wrote in the emails. If you think any of my statements above misrepresent what you’ve told me or what actually happened, I would welcome your comments.”

A couple of days later, July 7, Keeter wrote back to tell me, “I have conferred with my colleagues and we have nothing further to add to what we have already told you.”

Stonewalling

The publisher of iMediaEthics, Rhonda Roland Shearer, shared an earlier (very similar) draft of the above article with the Pew Charitable Trusts. In turn, the Board Chair of Pew Research Center, Donald Kimelman, asked Scott Keeter to write a response. [The full letter is posted at the end of this section.]

Donald Kimelman, chairman, Pew Research Center (Credit: PewTrusts)

Of course, we had already heard Keeter’s response, so his new explanation provided nothing he hadn’t already said. Importantly, though, the admissions of error and the promise not to repeat them were there for us, if not for the public at large [see my emphasis]:

“The study constitutes a valid reading of the views of members of the two largest and most important associations of news executives, ASNE and RTDNA. That was true when the study was originally posted and remains true today. Unfortunately, the methodology of the study was not accurately described in the original report.”

He tempers the inaccuracy of the methodology statement by continuing to claim that the original formulation was intentionally cautious (though in this letter he uses the word “conservative”):

“We try to be conservative in our claims about our methodology, but in this instance the original methodology statement was too conservative.”

This, of course, gives conservatism a bad name. Is it “conservative” to say that white is black, or is it just wrong?

“We were wrong because we didn’t do our homework” would probably have been the most accurate way to describe what happened.

However, what remains vague in the process is how Evans Witt initially came to believe that only a “substantial majority” of members – not all of them – had email addresses.

He claimed May 24 that this was the assurance he got from the organizations, but the explanation is a bit vague. Who told him that a “substantial majority” of members of RTDNA and ASNE had email addresses? Did each organization actually use the exact same terminology of “substantial majority”? That seems unlikely. What precisely did each organization tell him? Why was he so quick to repudiate his own original methodology statement, once I pointed to the discrepancy between it and Rosenstiel’s article? Had he so little confidence in his own work?

(A fact checker at iMediaEthics contacted both ASNE and RTDNA to ask them how many of their members had email addresses. Only ASNE responded. It reported 473 members overall, with 98% having email addresses. This includes 325 “active” members [“active” defined by ASNE], all of whom have email addresses. Witt wrote that ASNE sent him all of their members’ names [367], and they all had email addresses. Witt then removed 14 names from the list to arrive at 353 “active” members [“active” defined by him]. He said that those he removed were either retired or listed their current positions as a “non-newsroom job.” It’s not clear why there are discrepancies between what ASNE reported to us and what Keeter says ASNE reported to him.)

From a professional point of view, by far Keeter’s and Witt’s most questionable actions were to announce and post a revised methodology statement that they could not justify. Though Witt believed at the time that only a “substantial majority” – not all – of the two organizations’ members had email addresses, their new methodology statement said that all members were reachable by email. How did they, with honesty, arrive at that conclusion?

In his letter of explanation to Board Chair Kimelman, Keeter writes:

“In reviewing the study after an inquiry from David Moore, it was clear to me that this was, in fact, a representative survey of the membership of these two organizations.”

Despite persistent questioning, he has never explained why it was “clear” to him that it was a representative survey, when it certainly hadn’t been clear to Witt. Keeter then asserts:

“A census of the memberships of the two organizations was attempted.”

That statement borders on falsehood. At the time Keeter posted the revised statement, claiming a census of the memberships, he did not know whether it was true or not (and Witt’s information suggested it was not true). As Keeter later wrote in the letter to Kimelman:

“The one issue we had trouble resolving was whether all members of the associations were reachable by e-mail or not, as the revised methodology statement said. I thought the truth of this would be simple to ascertain, but it took multiple inquiries over the course of four weeks to get a definitive answer….” [emphasis added]

Thus, it is clear: For four weeks, he did not know whether the revised methodology statement was true or not. (And the only information Witt had suggested the statement was not true.)

Separately, Keeter also appears to acknowledge in a June 21 email that it was only my continued questions that prompted him to try to resolve the issue at all.

It does make one wonder, of course, whether posting unverified information on its website, and only later trying to verify it if pressed by a critic, is Pew’s secret modus operandi or just an aberration.

Of course, it must be gratifying to Pew that all seems to have worked out in the end – the sample is what Pew’s polling professionals claimed it to be (albeit the second time around), thus appearing to exonerate the author of the article who had paid no attention to the sample limits as initially described.

In any case, the Pew Charitable Trusts stands by its experts, according to Donald Kimelman, board chair of the Pew Research Center. He wrote to Rhonda Shearer that Keeter’s statement of explanation “reflects the views not just of the center’s leadership, but its parent organization and principal funder, the Pew Charitable Trusts.”

|

E-mail from Donald Kimelman to Rhonda Shearer and David Moore:

Dear Ms. Shearer and Mr. Moore:

Thanks for contacting us about your upcoming article on the Pew Research Center’s study of news executives. We reviewed the article and the voluminous correspondence over the past two months and asked Scott Keeter, director of survey research at the center, to draft a summative response. I’m attaching that response, as it reflects the views not just of the center’s leadership, but its parent organization and principal funder, the Pew Charitable Trusts.

Thanks for giving us the opportunity to comment.

Donald Kimelman
Managing Director of Information Initiatives, the Pew Charitable Trusts
Board chair, the Pew Research Center

Scott Keeter’s response:

“Earlier this year the Pew Research Center’s Project for Excellence in Journalism undertook a survey of news executives to shed light on how journalism’s leaders are dealing with the challenges facing the industry. The study constitutes a valid reading of the views of members of the two largest and most important associations of news executives, ASNE and RTDNA. That was true when the study was originally posted and remains true today. Unfortunately, the methodology of the study was not accurately described in the original report.

“The underlying issue is that the original methodology statement said that the survey was based on a non-representative sample. In reviewing the study after an inquiry from David Moore, it was clear to me that this was, in fact, a representative survey of the membership of these two organizations, which include news executives serving most of the news audience in the U.S. A census of the memberships of the two organizations was attempted, and the response rates were acceptable given current experience with elite surveys. Moreover, we saw no evidence of bias in the obtained sample. Accordingly, I proposed that we modify the methodology statement to reflect that fact. Our contractor, PSRAI, agreed with my interpretation and provided a new methodology statement which we posted.

“We try to be conservative in our claims about our methodology, but in this instance the original methodology statement was too conservative. We should have gotten the methodology statement right the first time, and are taking steps to ensure that does not occur again.

“In addition, there was one question about the corrected methodology statement that, for a variety of reasons, required longer for us to resolve than we would have liked. After the revised methodology statement was posted, David raised additional questions, which we answered, as the e-mail traffic between us reflects. The one issue we had trouble resolving was whether all members of the associations were reachable by e-mail or not, as the revised methodology statement said. I thought the truth of this would be simple to ascertain, but it took multiple inquiries over the course of four weeks to get a definitive answer: all members were, in fact, reachable by e-mail.

“The PEJ survey of news executives is an important contribution to our understanding of the difficult environment facing the news business today. We are confident about the validity of the study and its conclusions.”

|

Hoodwinking the Public

iMediaEthics’ publisher, Rhonda Shearer, wrote to Donald Kimelman, Board Chair of Pew Research, and asked him if a “correction” would finally be posted on Pew’s website – since the polling professionals had changed their methodology statement without transparency for the public.

The change had been posted on May 18, but almost two months later, there was still no official acknowledgment on Pew’s website about making an error or fixing it.

On July 16, Keeter replied that PEJ (Pew’s Project for Excellence in Journalism) had now noted the methodology change on its website.

At the bottom of the Pew methodology report, a footnote indicates that the methodology statement has been “updated.”

To be blunt, the footnote is a model of disingenuousness, designed to hoodwink – not inform – the public.

It says: “This methodology statement was updated on May 18, 2010, after query from an outside publication led the Pew Research Center, Princeton Survey Research Associates International and the Project for Excellence in Journalism to review the sample in this survey and determine that it was more robust than originally described.” [italics and underline added]

This doublespeak suggests that after an inquiry from an “outside publication” (aka iMediaEthics), Pew’s polling professionals discovered that not only was there no problem, but the sample was even better (“more robust”) than they had originally thought.

The truth is their methodology statement wasn’t “updated.” It was corrected. Ongoing events are updated. Sports scores are updated. Mistakes are corrected.

Their original methodology statement was corrected, because it was wrong. And egregiously so, if we accept Witt’s original and subsequent descriptions and explanations at face value. At best, it was sloppy work – which Pew has de facto repudiated.

Moreover, for these professionals to say they determined the sample was “more robust” than originally described is to imply it had some robustness in the original description. But – according to Witt – it had none. It couldn’t be generalized to any larger population. The second version says it can be used to generalize to the overall memberships of both ASNE and RTDNA.

This poll and method statement are supposed to be guided by science. If a geological expedition had described a newly discovered rock as black, when in fact it was white, could it follow Pew’s lead and just “update” the description by saying the rock was in fact “whiter” than originally described? That isn’t science; it’s nonsense.

Worse, this is hardly the “transparency” that Keeter and Witt, as top leaders in AAPOR and NCPP, are pushing for other polling groups. And it’s hardly the model of excellence that we have come to expect of Pew.

George F. Bishop is Professor of Political Science and Director of the Graduate Certificate Program in Public Opinion & Survey Research at the University of Cincinnati. His most recent book, The Illusion of Public Opinion: Fact and Artifact in American Public Opinion Polls (Rowman & Littlefield, 2005) was included in Choice Magazine’s list of outstanding academic titles for 2005 (January 2006 issue).

David W. Moore is a Senior Fellow with the Carsey Institute at the University of New Hampshire. He is a former Vice President of the Gallup Organization and was a senior editor with the Gallup Poll for thirteen years. He is author of The Opinion Makers: An Insider Exposes the Truth Behind the Polls (Beacon, 2008; trade paperback edition, 2009). Publishers Weekly refers to it as a “succinct and damning critique…Keen and witty throughout.”

* * * * * * *

Now Posted on Yahoo News, “iMediaEthics’ New PollSkeptics Report”