In a recent Pew report, “What 2020’s Election Poll Errors Tell Us About the Accuracy of Issue Polling,” the authors argue that even large election poll errors don’t signal serious accuracy problems with polls about public policy issues.

As one would expect from the experts at Pew, the report is a master class of objective analysis and reasonable caution about the limits of polls. Still, the conclusions are a bit of a red herring.

I say “a bit” of a red herring because some critics have concluded that election poll errors in 2020 do indeed undermine our confidence in the accuracy of issue polling. To those critics, the Pew report provides a compelling rebuttal.

But, in general, it’s a red herring to link the accuracy of election polling to the validity of public opinion on public policy issues. The real problem with issue polls is quite different from the issue with election accuracy. And it’s a problem that pollsters recognize, though one they are quite happy to ignore.

Challenges of Issue Polling

The major problem is that on most public policy issues, large segments of the public are unengaged. Most pollsters simply ignore that fact. Instead, they ask questions using a forced-choice format, which produces ridiculously high percentages of Americans who seem to have an opinion on almost any topic, no matter how complicated or arcane.

Moreover, opinion measured with the forced-choice format is highly sensitive to small differences in question wording, which often leads different pollsters to sharply contradictory findings.

Despite Pew’s reassurance about the accuracy of issue polling, Pew is a major perpetrator (along with most media pollsters) of this “manufactured” public opinion.

Here is an article I wrote four years ago after an exchange with Michael Dimock, Director of the Pew Research Center:

In his response to my article critical of a Pew poll, Michael Dimock, Director of the Pew Research Center, acknowledges that I raise a “legitimate point,” that “one major challenge to measuring public opinion is that people may not always know what you are asking about.”

I raised this point, because Pew reported that a majority of Americans opposed “prohibiting restaurants from using trans fats in foods.” Pew didn’t report how many people even knew the difference between fats and trans fats. I suggested that many people who were opposed to getting rid of trans fats may, in fact, have been thinking of just “fat,” not realizing that trans fats are artificial and dangerously unhealthy.

While Dimock concedes my point was a legitimate criticism, he responds that it’s simply impractical in general to find out whether people know what they’re talking about.

“Practically speaking, any given survey asks respondents about multiple people, issues and policies that they may or may not be familiar with – preceding each with a knowledge test would put a substantial burden on the interview and the person being surveyed.”

Let’s think about that comment for a moment.

Dimock is arguing that pollsters have so many questions in a survey that they don’t have time to find out whether people know anything about the issues included in that survey. According to this reasoning, reporting people’s opinions on subjects about which they have little or no knowledge is perfectly fine. And it’s not necessary to warn the reader, or (in this case) The News Hour, that public ignorance may be widespread.

Moreover, by using forced-choice questions (which do not offer the respondent an explicit “don’t know” option, and thus pressure respondents to give an opinion even if they don’t have one), the pollster can give the illusion that virtually everyone has an informed and meaningful opinion about almost any issue. (Pew reported 96% of Americans with an opinion on trans fats, though Pew had no idea how many people know what trans fats are.)

The use of forced-choice questions is the preferred modus operandi among pollsters, who recognize that it often leads to highly contradictory results.

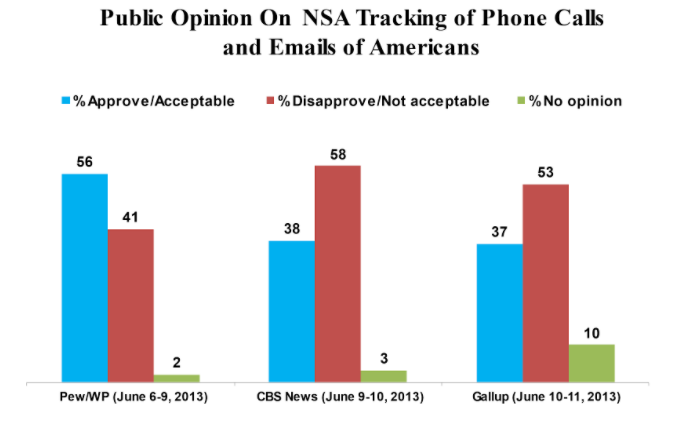

That recognition is illustrated by the results of three polls in 2013 about the discovery that the National Security Agency (NSA) engaged in tracking massive numbers of Americans’ email and phone records. The polls came up with wildly contradictory results. Pew reported that the public was greatly in favor of the tracking efforts, while Gallup and CBS said the public greatly opposed them.

What’s interesting about this case is not just the wildly different results, but pollsters’ reactions to the differences. They reveal that pollsters and media reporters recognize that the “opinion” generated by most media polls is not necessarily reflective of what the public really thinks.

Gallup’s Frank Newport, for example, opined that the 31-point swing in results between what Pew reported (a 15-point margin in favor) and what Gallup reported (a 16-point margin against) “is not a bit surprising.” Why? He notes:

“The key in all of this is that people don’t usually have fixed, ironclad attitudes toward many issues stored in some mental filing cabinet ready to be accessed by those who inquire. This is particularly true for something that they don’t think a lot about, something new, and something that has ambiguities and strengths and weaknesses.”

Huffington Post’s Mike Mokrzycki reinforced that view when he cited academic studies suggesting that “attitudes about privacy are not well formed,” which means that the “opinions” measured by polls are highly influenced by question wording and question context (the questions that are asked before the policy question).

That all sounds reasonable – that many people simply hadn’t made up their minds about the issue, and thus didn’t have meaningful opinions. So, if that was the case, how did all the pollsters report that virtually all Americans (90% to 98%) had opinions on the issue? Shouldn’t the pollsters have told us that, say, 40% to 50% of Americans hadn’t thought about the issue or didn’t have clearly formed opinions?

And that’s the nub of the problem with issue polling. It’s not whether the poll results are within a few percentage points of the “real” public opinion. It’s that those results are flaky at best, highly influenced by small differences in question wording, which conceal the high level of public disengagement that actually exists.

Instead of telling us the truth about the public, pollsters and the news media insist on using forced-choice questions that pressure people, even those who “don’t have fixed, ironclad attitudes,” to come up with some attitude anyway. So, the poll results make it appear as though virtually all Americans have such attitudes. And in the process, the opinions measured by one polling organization can differ widely from what another one produces.

The NSA and trans fat cases are not isolated. I’ve noted numerous examples over the years in which pollsters arrive at highly contradictory findings, differences that make election polling errors seem trivial. Other recent examples can be found on healthcare, the government shutdown, and the fairness of our federal tax system. Last month, I showed why we should be skeptical of the Global Affairs Poll reported by the Chicago Council.

We shouldn’t be reassured by Pew’s analysis showing that election polling errors have a small impact on issue polling. Yes, the analysis reassures those who are concerned about the presumed linkage. But for those concerned about the general distortion of public opinion caused by forced-choice questions, focusing on election polling accuracy is a red herring.